To regulate the use of AI while fostering innovation, the European Union adopted the AI Act, which sets rules graded according to the risks each AI application may pose to European citizens. The Regulation aims to ensure that AI is used ethically, safely, and transparently.

Definition of AI under the Regulation

The Regulation applies to "artificial intelligence systems" (or "AI systems"), defined as "a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments."

Who is concerned by the AI Act?

The Regulation identifies five types of actors:

- The provider,

- The importer,

- The distributor,

- The product manufacturer,

- The deployer (the entity implementing the AI system).

The Regulation has extraterritorial effect: it applies not only to actors in the European Union but also to some established outside it. It covers:

- providers who place AI systems on the market or put them into service in the EU, regardless of whether they are established within or outside the Union;

- deployers of AI systems that are established or located in the EU;

- providers and deployers of AI systems located in third countries if the outputs generated by the system are intended for use within the EU;

- importers and distributors of AI systems.

However, the Regulation does not apply to every AI system or AI model. Exclusions include:

- research, testing or development activity regarding AI systems or AI models prior to their being placed on the market or put into service;

- AI systems or AI models, including their output, specifically developed and put into service for the sole purpose of scientific research and development;

- AI systems exclusively designed for military, defense, or national security purposes, regardless of which type of entity is carrying out those activities;

- certain AI systems released under free and open-source licences (unless they are placed on the market or put into service as high-risk AI systems).

Risk Categories and Associated Obligations

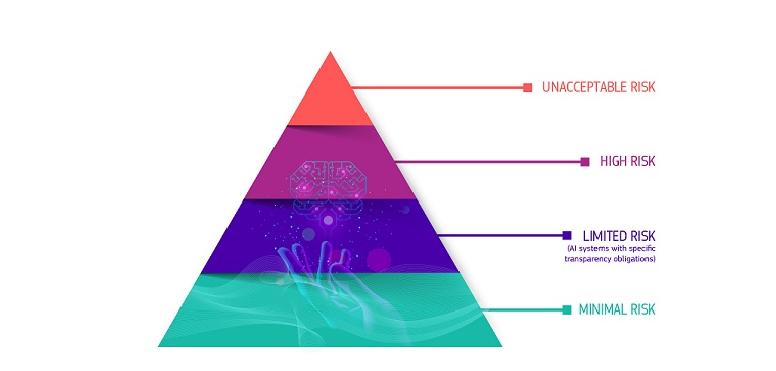

The AI Act introduces a classification of AI systems into four risk categories with varying compliance requirements:

1) Minimal or negligible risk AI systems: The AI Act allows the free use of minimal-risk AI.

Example: Spam filters.

The vast majority of AI systems currently used in the EU fall into this category. These systems may be freely developed and used, provided they comply with applicable laws. Providers of such systems may voluntarily adhere to codes of conduct.

2) Limited risk AI systems: These systems have a limited impact but present transparency risks.

Examples: Customer service chatbots, "deep fakes."

The Regulation imposes specific transparency obligations so that individuals know when they are interacting with an AI system or viewing AI-generated content.

3) High-risk AI systems: These systems operate in sensitive domains such as health, justice, security, or transportation and may significantly impact citizens' rights and safety. They are therefore subject to strict regulatory frameworks.

Examples: Biometric systems, systems used in recruitment or employee scoring, and law enforcement applications.

These systems must comply with stringent governance, transparency, human oversight, and risk management requirements. Providers and deployers must adhere to the following obligations:

| Obligation | Provider | Deployer |
| --- | --- | --- |
| Conformity Assessment | Conduct a comprehensive assessment before market entry | Verify compliance before deployment |
| Testing and Certification | Perform safety and performance tests | Ensure tests are conducted and validated |
| Documentation and Traceability | Provide complete technical documentation and reports | Ensure documentation is available and verifiable |
| Continuous Monitoring | Provide tools for continuous monitoring | Implement post-deployment monitoring |
| Transparency and Explainability | Provide information on AI system operation | Ensure transparency and access to explainability for users |
| Updates and Support | Provide updates when necessary | Apply updates and ensure compliance |
| Incident Reporting | Alert deployers in case of malfunction or risk | Notify authorities and users in case of incidents |

4) Unacceptable risk AI systems: These systems are deemed dangerous to individuals' rights and freedoms.

Examples: Social scoring, subliminal techniques, real-time remote biometric identification in public spaces for law enforcement, predictive policing targeting individuals, emotion recognition in workplaces or educational settings.

These technologies are prohibited by the Regulation.

(Source: European Commission website)
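The four tiers can be pictured as a simple lookup. The sketch below is only an illustration built from the examples given above: the names `RiskTier`, `EXAMPLE_TIERS`, and `describe` are hypothetical, and a real classification requires a case-by-case legal assessment rather than a keyword match.

```python
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Headline consequence of each tier, paraphrased from the article.
CONSEQUENCE = {
    RiskTier.MINIMAL: "free use; voluntary codes of conduct",
    RiskTier.LIMITED: "transparency obligations",
    RiskTier.HIGH: "strict governance, oversight and risk management",
    RiskTier.UNACCEPTABLE: "prohibited",
}

# Hypothetical lookup keyed by the examples the article gives (not exhaustive).
EXAMPLE_TIERS = {
    "spam filter": RiskTier.MINIMAL,
    "customer service chatbot": RiskTier.LIMITED,
    "deep fake": RiskTier.LIMITED,
    "recruitment screening": RiskTier.HIGH,
    "employee scoring": RiskTier.HIGH,
    "social scoring": RiskTier.UNACCEPTABLE,
    "workplace emotion recognition": RiskTier.UNACCEPTABLE,
}


def describe(use_case: str) -> str:
    """Return the tier and headline consequence for a known example."""
    tier = EXAMPLE_TIERS.get(use_case.lower())
    if tier is None:
        return f"{use_case}: not in the example table; assess case by case"
    return f"{use_case}: {tier.value} risk -> {CONSEQUENCE[tier]}"


print(describe("Spam filter"))
print(describe("Social scoring"))
```

Anything missing from the table deliberately falls through to a "assess case by case" message, since the tier depends on the intended purpose and context of use.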

General-Purpose AI Models

The AI Act also regulates general-purpose AI models, designed to perform a wide variety of tasks rather than being specific to a single application domain.

Under the Regulation, a general-purpose AI model means "an AI model, including where such an AI model is trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications, except AI models that are used for research, development or prototyping activities before they are placed on the market".

Examples:

- Language models (e.g., ChatGPT) capable of generating text and images;

- Virtual assistants (e.g., Siri, Alexa);

- Machine learning systems applicable to diverse fields like finance, commerce, logistics, or cybersecurity;

- AI development platforms enabling businesses to create tailored applications.

For this category, the AI Act imposes several levels of obligations, including:

- Transparency obligations for end users,

- Risk management obligations (to minimize risks such as bias, data security, and user rights impact),

- Performance and security evaluation obligations,

- Monitoring obligations to detect issues or deviations,

- Minimum documentation requirements.

Enforcement and Implementation Timeline

The AI Act entered into force on August 1, 2024, with a phased application:

- February 2, 2025: Prohibition of unacceptable risk AI systems;

- August 2, 2025: General-purpose AI rules take effect, and competent authorities are designated;

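- August 2, 2026: General application of the Regulation, including most high-risk AI rules, with regulatory sandboxes established by EU Member States;

- August 2, 2027: Rules take effect for high-risk AI systems that are safety components of regulated products (Article 6(1)).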
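To check which milestones have already been reached on a given day, the phased dates above can be placed in a small table. This is a minimal sketch: `MILESTONES` and `applicable_on` are hypothetical names, and the short labels are shorthand for the four entries in the list, not legal wording.

```python
from datetime import date

# The four application dates from the timeline above, with shorthand labels.
MILESTONES = [
    (date(2025, 2, 2), "prohibitions on unacceptable-risk systems"),
    (date(2025, 8, 2), "general-purpose AI rules"),
    (date(2026, 8, 2), "general application"),
    (date(2027, 8, 2), "high-risk rules (2027 milestone)"),
]


def applicable_on(day: date) -> list[str]:
    """Return the labels of all milestones reached on or before `day`."""
    return [label for start, label in MILESTONES if day >= start]


print(applicable_on(date(2025, 8, 1)))
# ['prohibitions on unacceptable-risk systems']
```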

Sanctions

The AI Act provides for fines ranging from €7.5 million to €35 million, or from 1% to 7% of the company's worldwide annual turnover, depending on the nature of the non-compliance.
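The arithmetic behind these ceilings can be sketched as follows. Two points in the sketch come from Article 99 of the Regulation rather than from the summary above and should be read as assumptions to verify against the legal text: the intermediate tier (€15 million or 3%), and the rule that the higher of the two amounts applies to undertakings while the lower applies to SMEs. The names `TIERS` and `max_fine` are hypothetical.

```python
# Fine ceilings per tier: (fixed cap in EUR, percent of worldwide annual turnover).
# The middle tier is taken from Article 99, not from the article's summary.
TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine(tier: str, turnover_eur: int, sme: bool = False) -> float:
    """Upper bound of the fine for a tier, given worldwide annual turnover."""
    fixed_cap, percent = TIERS[tier]
    turnover_cap = turnover_eur * percent / 100
    # Assumption: higher of the two for undertakings, lower of the two for SMEs.
    if sme:
        return min(fixed_cap, turnover_cap)
    return max(fixed_cap, turnover_cap)


print(max_fine("prohibited_practice", 1_000_000_000))  # 70000000.0
print(max_fine("prohibited_practice", 100_000_000))    # 35000000
```

These figures are ceilings only; the amount actually imposed depends on the circumstances of each case.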

Preparing for Compliance

Businesses developing or using AI systems should proactively align with the Regulation by:

- Identifying and assessing the AI systems used to determine their risk level (e.g., high or low risk);

- Appointing an internal compliance officer for AI;

- Documenting all AI systems in a detailed register (including descriptions, purposes, associated risks, and data used);

- Implementing measures to mitigate identified risks, particularly for high-risk systems;

- Ensuring transparency and security of AI systems;

- Regularly training internal teams on ethical and regulatory requirements;

- Establishing incident management processes and maintaining audit trails;

- Conducting continuous reviews and adapting to legislative developments.
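As a starting point for the register mentioned above, each AI system can be recorded as a structured entry. This is a minimal sketch, not a prescribed format: the class `AISystemRecord`, its fields, and the helper `needs_attention` are hypothetical and simply mirror the items listed (description, purpose, associated risks, data used).

```python
from dataclasses import dataclass, field


@dataclass
class AISystemRecord:
    """One entry in an internal AI register (illustrative fields only)."""
    name: str
    description: str
    purpose: str
    risk_level: str  # e.g. "minimal", "limited", "high", "unacceptable"
    associated_risks: list[str] = field(default_factory=list)
    data_used: list[str] = field(default_factory=list)
    mitigations: list[str] = field(default_factory=list)


def needs_attention(register: list[AISystemRecord]) -> list[str]:
    """Names of high-risk systems with no mitigation measure recorded yet."""
    return [r.name for r in register if r.risk_level == "high" and not r.mitigations]


register = [
    AISystemRecord("mail-filter", "Spam filter", "Email hygiene", "minimal"),
    AISystemRecord(
        "cv-screener",
        "Ranks job applications",
        "Recruitment",
        "high",
        associated_risks=["bias"],
        data_used=["CVs"],
    ),
]
print(needs_attention(register))  # ['cv-screener']
```

Keeping the register as structured data makes it easier to review regularly and to produce an audit trail when systems or their risk levels change.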